PCs are complex due to underlying hardware organisation. Consequences of this include difficulty in modifying or upgrading a PC, bloated operating systems and software stability issues. Is there an alternative that wouldn’t involve scrapping everything and starting over? I will describe one possible solution with both its benefits and drawbacks.

What (most) users want

What do you want from your computer? This is quite an open question, with a large number of possible answers. Computer users can generally be categorised between two extremes. There are those who just want to type documents, write email, play games and look at porn—all the wonderful things modern PCs make possible. They want their computer to work like their TV/DVD/Hi-Fi, turn it on, use it for purpose, and then turn it off. If something goes wrong they’re not interested in the why or how, they just want the problem resolved. At the other end of the scale there are those who love to tinker, to dig into the bowels of their machine for kicks, to eke out every last ounce of performance, or to learn. If something goes wrong they’ll read the documentation, roll up their sleeves and plunge back in.

Where do you as a user belong on this scale? As a Free Software Magazine reader you’re probably nearer the tinkerer end of the spectrum (and you’re also probably asking, what does any of this have to do with free software. Well, bear with me). The majority of users, however, are nearer the indifferent end of the scale, they neither know nor care how a PC works. If their PC works for the tasks they perform and runs smoothly, all is well—if it doesn’t, they can either call up an IT helpline or twiddle their thumbs (usually both). It’s easy to dismiss such users, many problems that occur on any system are usually well documented or have solutions easily found with a bit of googling. Is it fair to dismiss such users? Sometimes it is, but, more often than not, you just can’t expect an average user to solve software or hardware problems themselves. The users in question are the silent majority, they have their own jobs to do and are unlikely to have the time, requisite knowledge or patience to troubleshoot; to them documentation, no matter how well indexed or prepared, is tedious and confusing. They don’t often change their settings and their desktops are crammed with icons. They are the target demographic of desktop operating systems. Ensnared by Windows, afraid of GNU/Linux, they were promised easy computing but have never quite seen it. Can an operating system appeal to these users without forcing everyone into the shackles of the lowest common denominator? Can developers make life easier for the less technically oriented, as well as themselves, without sacrificing freedom or power? Can we finally turn PCs into true consumer devices while retaining their awesome flexibility? I believe we can, and the answer lies (mostly) with GNU/Linux.

This article loosely describes an idealised PC, realised through changes in how the underlying hardware is organised, along with the consequences, both good and bad, of such a reorganisation. It is NOT a HOW-TO guide, you will not be able to build such a PC after reading this article (sorry to disappoint). The goal is to try and encourage some alternate thinking, and explore whether we truly are being held back by the hardware.

The goal is to try and encourage some alternate thinking, and explore whether we truly are being held back by the hardware

The problem with PCs and operating system evolution

So what are the problems with PCs that make them fundamentally difficult to use for the average person. The obvious answer would probably be the quality of software, including the operating system that is running on the PC. Quality of software covers many areas, from installation through everyday use to removal and upgrading, as well as the ability to modify the software and re-distribute it. A lot of software development also necessitates the re-use of software (shared libraries, DLLs, classes and whatnot) so the ability to re-use software is also an important measure of quality, albeit for a smaller number of users. Despite their different approaches, the major operating systems in use on the desktop today have both reached a similar level of usability for most tasks, they have the same features (at least from the users point of view), similar GUIs, even the underlying features of the operating system work in a similar manner. Some reasons for this apparent “convergence” are user demand, the programs people want to run, the GUI that works for most needs and the devices that users want to attach to their PC to unlock more performance or features. While this is evidently true for the “visible” portions of a PC (i.e. the applications) it doesn’t explain why there are so many underlying similarities at the core of the operating systems themselves. The answer to this, and by extension, the usability problem, lies in the hardware that the operating systems run on.

Am I saying the hardware is flawed? Absolutely not! I’m constantly amazed at the innovations being made in the speed, size and features of new hardware, and in the capability of the underlying architecture to accommodate these features. What I am saying is there is a fundamental problem in how hardware is organised within a PC, and that this problem leads to many, if not all, of the issues that prevent computers from being totally user friendly.

There is a fundamental problem in how hardware is organised within a PC, which leads to many, if not all, of the issues that prevent computers from being totally user friendly

The archetypal PC consists of a case and power supply. Within the case you have the parent board which forms the basis of the hardware and defines the overall capabilities of the machine. Added to this you have the CPU, memory, storage devices and expansion cards (sound/graphics etc.). Outside of the case you’ve got a screen and a keyboard/mouse so the user can interact with the system. Cosmetic features aside, all desktop machines are fundamentally like this. The job of the operating system is to “move” data between all these various components in a meaningful and predictable way, while hiding the underlying complexity of the hardware. This forms the basis for all the software that will run on that operating system. The dominant desktop operating systems have all achieved this goal to a large extent. After all, you can run software on Windows, GNU/Linux or Mac OS without worrying too much about the hardware—they all do it differently, but the results are essentially the same. What am I complaining about then? Unfortunately, hiding the underlying complexity of the hardware is, in itself, a complex task: data whizzes around within a PC at unimaginable speed; new hardware standards are constantly evolving; and the demands of users (and their software) constantly necessitate the need for new features within the operating system. The fact that an operating system is constantly “under attack” from both the underlying hardware and the demands of the software mean these systems are never “finished” but are in a constant state of evolution. On the one hand this is a good thing, evolution prevents stagnation and has got us to where we are today (in computing terms, things have improved immeasurably over the 15 years or so that I’ve been using PCs). On the other hand, it does mean that writing operating systems and the software that runs on them will not get any easier. Until developers have the ability to write reliable software that interact predictably with both the operating system/hardware and the other software on the system, PCs will remain an obtuse technology from the viewpoint of most users, regardless of which operating system they use.

How do operating systems even manage to keep up at all? The answer is they grow bigger. More code is added to accommodate features or fix problems (originating from either hardware, other operating system code or applications). Since their inception, all the major operating systems have grown considerably in the number of lines of source code. That they are progressively more stable is a testament to the skill and thoughtfulness of the many people that work on them. At the same time, they are now so complex, with so many interdependencies, that writing software for them isn’t really getting any easier, despite improvements in both coding techniques and the tools available.

Device drivers are a particularly problematic area within PCs. Drivers are software, usually written for a particular operating system, which directly control the various pieces of hardware on the PC. The operating system, in turn, controls access to the driver, allowing other software on the system to make use of the features provided by the hardware. Drivers are difficult to write and complicated, mainly because the hardware they drive is complicated but also because you can’t account for all the interactions with all the other drivers in a system. To this day most stability problems in a PC derive from driver issues.

The devices themselves are also problematic from a usability standpoint. Despite advances, making any changes to your hardware is still a daunting prospect, even for those that love to tinker. Powering off, opening your case, replacing components, powering back on, detecting the device and installing drivers if necessary can turn into a nightmare, especially when compared to the ease of plugging in and using say, a new DVD player (though some people find this tough also). Even if things go smoothly there’s still the problem of unanticipated interactions down the line. If something goes wrong the original parts/drivers must be reinstated, and even this can lead to problems. In the worst case, the user may be left with an unbootable machine.

The way PCs are organised, with a parent board and loads of devices crammed into a single case, makes them not only difficult to understand and control physically, but has the knock on effect of making the software that runs on them overly complex and difficult to maintain. More external devices are emerging and these help a bit (particularly routers) but don’t alleviate the main problem. An operating system will never make it physically easier to upgrade your PC, while the driver interaction issues and the fact that the operating systems are always chasing the hardware (or that new hardware must be made to work with existing operating systems) means that software will always be buggy.

Powering off, opening your case, replacing components, powering back on, detecting the device and installing drivers if necessary can turn into a nightmare

If we continue down the same road, will these problems eventually be resolved? Maybe, the people working on these systems, both hard and soft, are exceptionally clever and resourceful. Fairly recent introductions such as USB do solve some of the problems, making a subset of devices trivial to install and easy to use. Unfortunately, the internal devices of a PC continue to be developed along the same lines, with all the inherent problems that entails.

We could build new kinds of computer from scratch, fully specified with a concrete programming model that takes into account all we’ve learned from hardware and software (and usability) over the past half century. This, of course, wouldn’t work at all, with so much invested in current PCs both financially and intellectually we’d just end up with chaos, and no guarantee that we wouldn’t be in the same place 20 years down the line.

We could lock down our hardware, ensuring a fully understandable environment from the devices up through the OS to the applications. This would work in theory, but apart from limiting user choice it would completely strangle innovation.

More realistically, we can re-organise what we have, and this is the subject of the next section.

Abstracted OS(es) and consumer devices

What would I propose in terms of reorganisation that would make PCs easier to use?

Perhaps counter intuitively, my solution would be to dismantle your PC. Take each device from your machine, stick them in their own box with a cheap CPU, some memory and a bit of storage (flash or disk based as you prefer). Add a high-speed/low latency connection to each box and get your devices talking to each other over the “network” in a well defined way. Note that network in this context refers to high-performance serial bus technology such as PCI Express and HyperTransport, or switched fabric networking I/O such as Infiniband, possibly even SpaceWire. Add another box, again with a CPU, RAM and storage, and have this act as the kernel, co-ordinating access and authentication between the boxes. Finally, add one or more boxes containing some beefy processing power and lots of RAM to run your applications. Effectively what I’m attempting to do here is turn each component into a smart consumer appliance. Each device is controlled by its own CPU, has access to its own private memory and storage, and doesn’t have to worry about software or hardware conflicts with other devices in the system, immediately reducing stability issues. The creator of each device is free to implement whatever is needed internally to make it run, from hardware to operating system to software; it doesn’t matter, because the devices would all communicate over a well-defined interface. Linux would obviously be a great choice in many cases; I imagine you could run the kernel unmodified for many hardware configurations.

What are the advantages of this approach? Surely replacing one PC with multiple machines will make things more difficult. That is true to some extent, but handled correctly this step can remove many headaches that occur with the current organisation. Here are just some of the advantages I can think of from this approach (I’ll get to the disadvantages in the next section).

Testing a device is simpler, no need to worry about possible hardware or software conflicts, as long as the device can talk over the interconnect, things should be cool

Device isolation

Each device is isolated from all the others in the system, they can only interact over a well defined network interface. Each device advertises its services/API via the kernel box. Other devices can access these services, again authenticated and managed by the kernel box. Device manufacturers ship their goodies as a single standalone box, and they of course have full control over the internals of said box, there are no other devices in the box (apart from the networking hardware and storage) to cause conflicts. Each device can be optimised by its manufacturer, and there’s the upside that the “drivers” for the device are actually part of the device. Testing a device is simpler, no need to worry about possible hardware or software conflicts, as long as the device can talk over the interconnect, things should be cool. Note that another consequence of this is that internally the device can use any “operating system” and software the manufacturer (or the tinkering user) needs to do the job, as well as whatever internal hardware they need (though of course all devices would need to implement the networking protocol). Consequently, integrating new hardware ideas becomes somewhat less painful too. Additional benefits of isolation include individual cooling solutions on a per device basis and better per device power management. On the subject of cooling the individual devices should run cool through the use of low frequency CPUs and the fact that there isn’t a lot of hardware in the box. As well as consuming less power this is also great for the device in terms of stability. Other advantages include complete autonomy of the device when it comes to configuration (all info can be stored on the device), update information (again, relevant info can be stored on the device), documentation, including the API/capabilities of the device (guess where this is kept!) and simplified troubleshooting (a problem with a device can immediately be narrowed down, excluding much of the rest of the system, this isn’t the case in conventional PCs). Another upside is that if the device is removed it would not leave orphaned driver files on the host system. Snazzy features such as self-healing drivers are also more easily achieved when they don’t have to interfere with the rest of the system (the device “knows” where all its files are, without the need to store such information).

Many architectures

Device isolation means the creator can use whatever hardware they desire to implement the device (aside from the “networking” component, which would have to be standardised, scalable and easily upgraded). RISC, CISC, 32 bit, 64 bit, intelligent yoghurt, whatever is needed to drive the device. All these architectures could co-exist peacefully as long as they’re isolated with a well defined communication protocol between them.

No driver deprecation

As long as the network protocol connecting the boxes does not change the drivers for the device should never become obsolete due to OS changes. If the network protocol does change, more than likely only the networking portion of the device “driver” will need an update. Worst case scenario is if the network “interface” (i.e. connector) changes. This would necessitate a new device with the appropriate connection or modification to the current device.

Old devices can be kept on the system to share workload with newer devices, or the box can be unplugged and given to someone who needs it

No device deprecation

Devices generally fall out of use for two reasons: a) lack of driver support in the latest version of the operating system; and b) no physical connector for the device in the latest boxes. This problem would not exist because as mentioned in “No driver deprecation” above the driver is always “with” the device and can always be connected through the standard inter-device protocol. Old devices can be kept on the system to share workload with newer devices, or the box can be unplugged and given to someone who needs it, the new user can just plug the box into their system and start using the device, much better than the current system of farting around trying to find drivers and carrying naked expansion cards around and stuffing them into already overcrowded cases. As users upgrade their machines it is often the case that some of their hardware is not compatible with the upgrades. For example, upgrading the CPU in a machine often means a new motherboard. If a new standard for magnetic storage surfaces, very often this requires different connectors to those present on a user’s motherboard, thus necessitating a chain of upgrades to use the new device. Isolated devices remove this problem. When a device is no longer used in a PC it is generally the case that it is sold, discarded or just left to rot somewhere. Obtaining drivers for such devices becomes impossible as they are phased out at the operating system and hardware level, and naked expansion cards are so very easily damaged. In this system there would be no need to remove an old device, even if you have obtained another device that does the same job. The old device could remain in the system and continue to do its job for any applications that needs it, and taking some load from the new device where it can. As long as there is versioning on the device APIs there is no chance of interference due to each device having self-contained device drivers. Devices can be traded easily, they come with their own device drivers so can just be plugged in and are less likely to be damaged in transit. One of the great features of GNU/Linux is its ability to run on old hardware; this would be complimented greatly by the ability to include old hardware along with new in a running desktop system, something that would normally be very difficult to achieve.

Security

Security is a big issue and one which all operating systems struggle to fulfil for various reasons. While most operating systems are now reasonably secure keeping intruders out and user data safe is a constant battle. This system would potentially have several advantages in the area of security; the fact that the Kernel is a self-contained solution, probably running in firmware, meaning overwriting the operating system code, either accidentally or maliciously would be very difficult if not impossible, and would likely require physical access to the machine. For the various devices, vendors are free to build in whatever security measures they see fit without worrying about the effect on the rest of the system. They could be password protected, keyed to a particular domain or whatever to prevent their use in an unauthorised system. Additional measures such as tamper proofing on the container could ensure that it would be extremely difficult to steal data without direct access to the machine and user account information. Of course, care must be taken not to compromise the user’s control of their own system. There are several areas of security (denial of service attacks for example) where this system would be no better off than conventional systems, though it may suffer less as the network device under attack could be more easily isolated from the rest of the system. In fact it would be easier to isolate points of entry into the system (i.e. external network interfaces and removable media); this could be used to force all network traffic from points of entry through a “security box” with anti-virus and other malware tools, allowing for the benefits of on-demand scanning without placing a burden on the main system. It is likely that a new range of attacks specific to this system would appear, so security will be as much a concern as it is on other systems and should be incorporated tightly from the start.

Despite the introduction of screw-less cases, jumper-less configuration and matching plug colours, installing hardware remains a headache on most systems

Device installation

Despite the introduction of screw-less cases, jumper-less configuration and matching plug colours, installing hardware remains a headache on most systems. With for example a new graphics card, the system must be powered down and the case opened, the old card removed and the new one seated. Then follows a reboot and detection phase followed by the installation (if necessary) of the device driver. Most systems have made headway in this process, but things still do go wrong on a regular basis, and often the system must still be powered down while new hardware is installed, and if things go wrong it can be very difficult to work out what the exact problem is. By turning each device into a consumer appliance, this system would allow for hot swapping of any device. Once power and networking are plugged into the device the device would automatically boot and be integrated into the “kernel”. No device driver installation would be necessary as all the logic for driving the device is internal to the device. If the Kernel is unable to speak to the device over the network this would generally signify a connectivity problem, a power problem or a fault with the device itself. Because devices are wrappers for “actual” devices there is no need to touch the device’s circuitry, so removing risk of damage as can happen with typical expansion cards and also removing the danger of electric shocks to the user.

Increased performance

This is admittedly a grey area, true performance changes could only be checked by building one of these things. Latency over the network between the devices will be a bottleneck for performance, the degree of which is determined by the latency of whatever bus technology is used. Where performance will definitely increase though, is through the greater parallelism of devices. With each device having its own dedicated processor and memory there will be no load on the kernel or application boxes when the device is in use (except for message passing between the boxes, which will cause some overhead). It should also be easier to co-ordinate groups of similar devices, if present, for even greater performance. In the standard PC architecture, device activity generates interrupts, sometimes lots of them, which result in process switching, blocking, bus locking and several other performance draining phenomenon. With the interrupt generating hardware locked inside a box the software on the device can make intelligent decisions about data moving into and out of the device, as well as handling contention for the device. More performance tweaks can be made at the kernel level; per device paging and scheduling algorithms become a realistic prospect, instead of the one size fits all algorithms necessarily present in the standard kernel.

Device driver development

It’s easier to specify a kernel, list of modules and other software in a single place (on the device), along with update information and configuration for the device, than it is to merge the device driver either statically or dynamically into an existing kernel running on a system with several other devices and an unknown list of applications.

With device isolation it should be a lot simpler to build features that rely on that device, without forcing too much overhead on the rest of the system

Features

With device isolation it should be a lot simpler to build features that rely on that device, without forcing too much overhead on the rest of the system. For example, a box dedicated to storage could simply consist of an ext3 file system that could be mounted by other boxes in the system. On the other hand, you could build in an indexing service or a full database file system. These wouldn’t hog resources from the rest of the system as they do in a conventional PC, and would be easier to develop and test. Experimentation with new technologies would benefit due to the only constraint for interoperability with the system being the device interconnect (which would be specified simply as a way of moving data between devices), developers could experiment with new hardware and see the results in-situ on a running system (as opposed to having to connect potentially unstable hardware directly to a parent board with other devices). Users could pick and choose from a limitless array of specialised appliances, easily slotted together, which expose their capabilities to the kernel and applications. Without a parent board to limit such capabilities the possibilities literally are endless.

Accessibility

This is still a difficult area in all major operating systems, mainly because they are not designed from the ground up to be accessible. Most accessibility “features” in current operating systems seem like tacked on afterthoughts. I’m not sure if this is to do with the difficulty in incorporating features for vision or mobility impaired users or just whether a lot of developers think it’s not worth the hassle accounting for “minorities”, or maybe they don’t think about it at all. The fact that the population as a whole is getting older means that over the next few decades there are going to be many more “impaired” users and we should really be making sure they have full access to computing, as things stand now it is still too difficult for these users to perform general computing, never mind such things as changing hardware or programming. I see a distributed device architecture as being somewhat beneficial in this regard, apart from making hardware easier to put together for general and impaired users, the system is also conducive to device development, with the possible emergence of more devices adapted for those who need them. The many and varied software changes such a system would require would also offer a good opportunity to build accessibility into the operating system from the start, which certainly doesn’t seem to be the case with current systems.

The ability to upgrade the processing power of a machine in this way is just not possible with a conventional PC organisation

Applications

The actual programs, such as email, word-processing and web browsers would run on the application boxes described earlier. To re-iterate, these boxes would generally consist solely of processors, memory and storage to hold the paging file and possibly applications depending on overall system organisation. More hardware could be included for specialised systems. All hardware and kernel interactions take place over the device interconnect, so applications running on one of these boxes can do so in relative peace. Because devices in the system can be packaged with their own drivers and a list of capabilities it should be fairly easy to detect and make use of desired functionality, both from the kernel and other devices. Another advantage would be the ability to keep adding application boxes (i.e. more CPU and RAM) ad-infinitum; the kernel box would detect the extra boxes and automatically make use of them. The ability to upgrade the processing power of a machine in this way is just not possible with a conventional PC organisation.

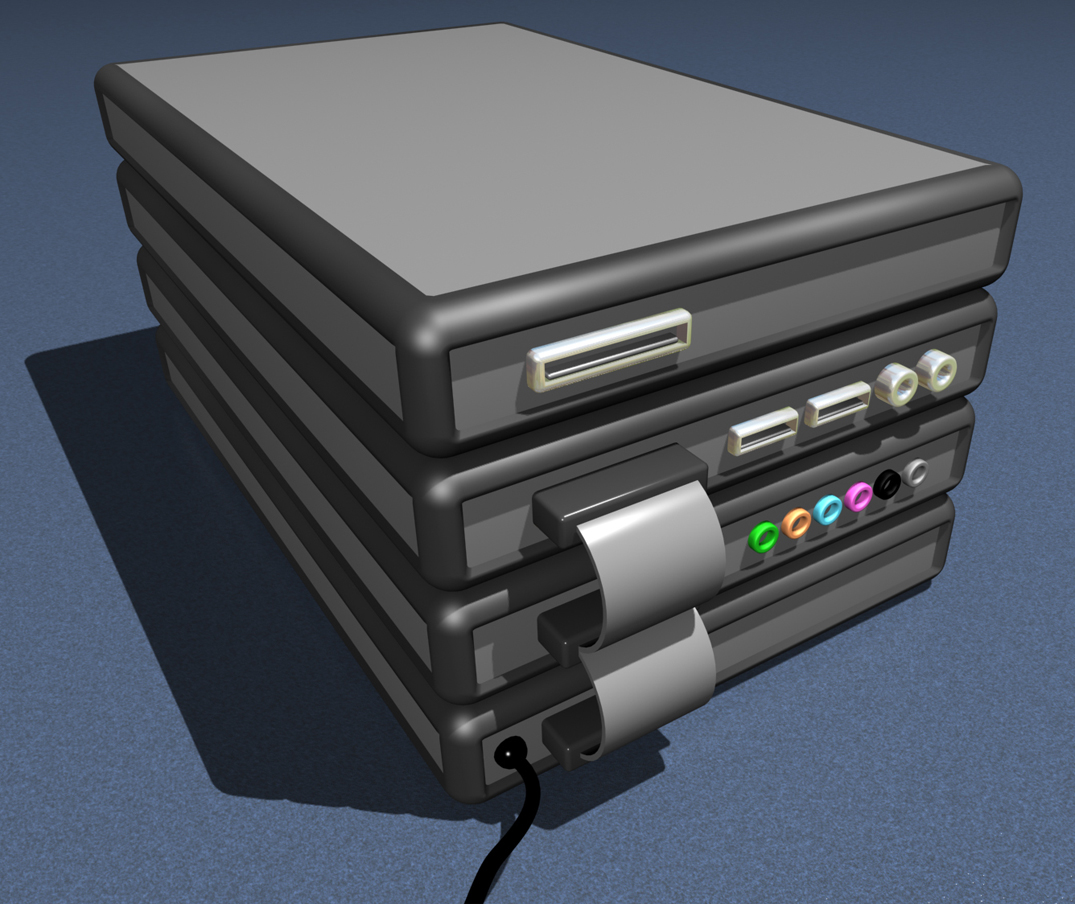

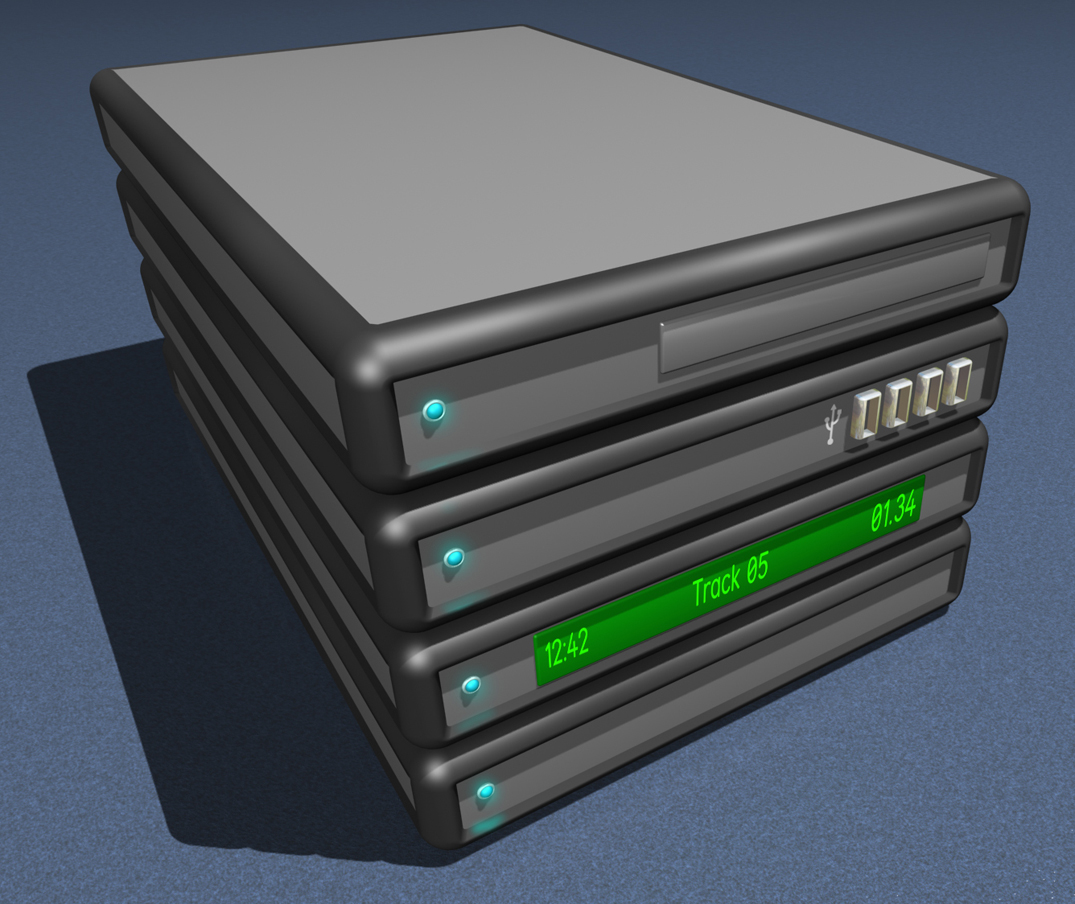

Figures 1 and 2 show front and back views of a mock-up of a distributed PC, mimicking somewhat the layout of a stacked hi-fi system. While this is a workable and pleasing configuration in itself there are literally endless permutations in size and stacking of the components. The figures also illustrate the potential problems with cabling. Only one power cable is shown in the diagram, but there could be one for every box in the system unless some “pass-through” mechanism is employed for the power (again employed in many hi-fi systems). The data cables (shown as ribbon cables in the diagram) would also need to be more flexible and thinner to allow for stacking of components in other orientations. Could the power and networking cables be combined without interference? The boxes shown are also much larger than they’d need to be, for many components it would be possible to achieve box sizes of 1-3 inches with SBC or the PC/104 modules available today. The front view also shows an LCD display listing an audio track playing on the box; this highlights another advantage in that certain parts of the system could be started up (i.e. just audio and storage) while the rest of the system is offline, allowing the user for example to play music or watch DVDs without starting the rest of the system.

So, we have a distributed PC consisting of several “simplified” PCs, each of them running on different hardware with different “conventional” operating systems running on each. Upgrading or modifying the machine is greatly simplified, device driver issues are less problematic and the user has more freedom in the “layout” of the hardware in that it is easier to separate out the parts they need near (removable drives, input device handlers) from the parts they can stick in a cupboard somewhere (application boxes, storage boxes). What about the software? How can such a system run current applications? Would we need a new programming model for such a system?

There are many possibilities for the software organisation on such a system. One obvious possibility is running a single Linux file system on the kernel box, with the devices mapped into the tree through the Virtual File System (VFS). The /dev portion of the tree would behave somewhat like NFS, with file operations being translated across the interconnect in the background. Handled correctly, this step alone would allow a lot of current software to work. An application requesting access to a file would do so via the kernel box, which could translate the filename into the correct location on another device, a bit of overhead but authentication and file system path walking would be occurring at this point anyway. Through the mapping process it would be possible to make different parts of a “single” file appear like different files spread throughout the file system. Virtual block devices are a good way to implement this, with a single file appearing as a full file system when mounted. This feature could be utilised to improve packaging of applications which are generally spread throughout the file system, I’ve always been of the belief that an application should consist of a single file or package (not the source, just the program). Things are just so much simpler that way (while I’m grumbling about that, I also think Unicode should contain some programmer specific characters; escaping quotes, brackets and other characters used in conventional English is both tedious, error prone and an overhead, wouldn’t it be great if programmers had their own delimiters which weren’t a subset of the textual data they usually manipulate?).

There is no shortage of research into multicomputer and distributed systems in general, again all very relevant

Software would also need to access the features of a device through function calls, both as part of the VFS and also for specific capabilities not covered by the common file model of the VFS. Because the API of each device, along with documentation, could easily be included as part of the devices “image” linking to and making use of a device’s API should be relatively simple, with stubs both in the application and the device shuttling data between them.

A programming model eminently suitable for a distributed system like this is that of tuple spaces, I won’t go into detail here, you can find many resources on Google under “tuple spaces”, “Linda” and “JavaSpaces”). Tuple spaces allow easy and safe exchange of data between parallel processes, and have been used already in JINI, a system for networking devices and services in a distributed manner pretty similar to what I’m proposing, and both Sun (who developed JINI) and Apache River (though they’ve just started) have covered much ground with the problems of distributed systems; most of their ideas and implementation would be directly relevant to this “project”. The client/server model, as used by web servers could also serve as a good basis for computing on this platform; Amoeba is an example of a current distributed OS which employs this methodology. There is no shortage of research into multicomputer and distributed systems in general, again all very relevant.

I’m being pretty terse on the subject of running software, partly because the final result would be achieved through a lot of research, planning and testing; mainly because I haven’t thought of specifics yet (important as they are!). The main issue is that applications (running on application boxes) have a nicer environment within which to run, free from device interruptions and the general instability that occurs when lots of high speed devices are active on a single parent board. I’m also confident that Linux can be made to run on anything, and this system is no exception. I’m currently writing more on the specific hardware and software organisation of a distributed PC, based on current research as well as my own thoughts; if I’m not battered too much for what I’m writing now I could maybe include this in a future article. Of course, if anyone wants to build one of these systems I’m happy to share all my thoughts! None of this is really new anyway; there’s been a lot of research and development in the area of distributed computing and networked devices, especially in recent years. Looking at sites such as linuxdevices.com shows there is a keen interest among many users to build their own devices, extending this idea into the bowels of a PC, and allowing efficient interoperation between such devices, seems quite natural. With the stability of the Linux kernel (as opposed to the chaos of distros and packaging), advances in networking and the desire for portable, easy to use devices from consumers I believe this is an idea whose time has come.

The platinum bullet

At this stage you may or may not be convinced of how this organisation will make computing easier. What you are more likely aware of is the disadvantages and obstacles faced by such a system. In my eyes, the three most difficult problems to overcome are cost, latency and cabling. There are, of course, many sticky issues revolving around distributed software, particularly timing and co-ordination; but I’ll gloss over those for the purposes of this article and discuss them in the follow-up.

The three most difficult problems to overcome are cost, latency and cabling

Obviously giving each device its own dedicated processor, ram and storage, as well as a nice box; is going to add quite a bit to the price of the device. Even if you only need a weak CPU and a small amount of RAM to drive the device (which is the case for most devices) there will still be a significant overhead, even before you consider that each device will need some kind of connectivity. For the device interconnect to work well we’re talking serious bandwidth and latency constraints, and these aren’t cheap by today’s standards (Infiniband switches can be very expensive!). Though this seems cataclysmic I really don’t think it’s a problem in the long term. There are already plenty of external devices around which work in a similar way and these are getting cheaper all the time. There are also the factors that it should be cheaper and simpler to develop hardware and drivers, which should help reduce costs, particularly if the system works well and a lot of users take it on. Tied in with cost are the issues of redevelopment. While I don’t think it would take too much effort to get a smooth running prototype up, there may have to be changes in current packages or some form of emulation layer to allow current software to run.

The second issue, latency, is also tied up quite strongly with cost. There are a few candidates around with low enough latency (microsecond latency is a must) and high enough bandwidth to move even large amounts of graphical data around fairly easily. The problem is that all of these technologies are currently only seen in High Performance Computing clusters (which this system “sort of” emulates, indeed, a lot of HPC would be relevant to this system) and cost a helluva lot. Again this is a matter of getting the technology into the mainstream to reduce costs. Maybe in ten years time it’ll be cheap enough! By then Windows will probably be installed in the brains of most of the population and it’ll be too late to save them.

Interconnecting several devices in a distributed system will involve a non-trivial amount of cabling, with each box requiring both power and networking cables as well as any device specific connectors. Reducing and/or hiding this, and making the boxes look pretty individually as well as when “combined” will be a major design challenge.

Another possible problem is resistance, people really don’t like change. The current hardware organisation has been around a long time and continues to serve us well, why should we rock the boat and gamble on an untested way of using our computers?

Integration of devices on a parent board was a practical decision at the time it was made, reducing the cost and size of PCs as well as allowing for good performance. With SBC (Single Board Computers), tiny embedded devices and cheap commodity hardware, none of the factors which forced us down this route still apply. Linux doesn’t dictate hardware and even though this system would give more freedom with regards to hardware it does require a change in thinking. A lot of work would also be needed to build this system (initially) and the benefits wouldn’t be immediately realisable in comparison to the cost. My main point though is that, once the basics are nailed down, what we’d have is an easy to use and flexible platform inclusive to all hardware (even more so than Linux is already). Interoperability would exist from the outset at the hardware level, making it much easier to build interoperability at the software level. With such a solid basis, usability and ease of development should not be far behind. The way things are going now it seems that current systems are evolving toward this idea anyway (though slowly and with a lot of accumulated baggage en-route). One of the first articles I read on FSM was how to build a DVD player using Linux; this is the kind of hacking I want this system to encourage.

Imagine a PC where all the hardware is hot-swappable, with drivers that are easily specified, modified and updated, even for the average user

Welcome to the world of tomorrow

With Linux we have an extremely stable and flexible kernel. It can run on most hardware already and can generally incorporate new hardware easily. The organisation of the hardware that it (and other operating systems) runs on however, forces a cascade effect, multiplying dependencies and complexities between device drivers and hence the software that uses those devices. The underlying file paradigm of Linux is a paragon of beauty and simplicity that is unfortunately being lost, with many distros now seemingly on a full-time mission of maintaining software packages and the dependencies between them.

Imagine a PC where all the hardware is hot-swappable, with drivers that are easily specified, modified and updated, even for the average user; a system where the underlying hardware organisation, rather than forcing everything into a tight web of easily broken dependencies, promotes modularity and interoperability from the ground-up. A system that can be upgraded infinitely and at will, as opposed to one which forces a cycle of both software and hardware updates on the user. A system truly owned by the user.

The Linux community is in a fantastic position to implement such a system, there isn’t any other single company or organisation in the world that has the collective knowledge, will or courage to embrace and develop such an idea. With the Kernel acting as a solid basis for many devices, along with the huge amount of community software (and experience) that can be leveraged in the building of devices, all the components needed for a fully distributed, Linux based PC are just “a change in thinking” away.

What would be the consequences if distributed PCs were to enter the mainstream? Who would benefit and who would suffer? Device manufacturers would definitely benefit from greatly reduced constraints as opposed to those currently encountered by internal PC devices. Users would have a lot more freedom with regards to their hardware and in many cases could build/modify their own devices if needs be, extending the idea of open source to the hardware level.

Large computer manufacturers (such as Dell) could stop releasing prebuilt systems with pre-installed operating systems and instead focus on selling devices with pre-installed drivers. This is a subtle but important distinction. One piece of broken hardware (or software) in a pre-built desktop system usually means a lot of talking with tech-support or the return of the complete system to the manufacturer to be tested and rebuilt. A single broken device can be diagnosed quickly for problems and is much less of a hassle to ship.

What would be the impact on the environment? Tough to say directly, but I suspect there would be far fewer “obsolete” PCs going into landfill. With per device cooling and power saving becoming more manageable in simplified devices things could be good (though not great, even the most energy efficient devices still damage the environment, especially when you multiply the number of users by their ever-growing range of power-consuming gadgets).

A single broken device can be diagnosed quickly for problems and is much less of a hassle to ship

Big operating system vendors could lose out big-time in a distributed Linux world. In some ways this is unfortunate, whether you like them or not those companies have made a huge contribution to computing in the last couple of decades, keeping pace with user demand while innovating along the way. On the other hand, such companies could start channelling their not inconsiderable resources into being a part of the solution rather than part of the problem. It might just be part of the Jerry Maguire-esque hallucination that inspired me to write this article, but I’d really love to see a standardised basis for networked devices that everyone would want to use. I think everyone wants that, it’s just a shame no-one can agree on how.