Companies seek to counter their competition in a variety of ways: pricing, packaging, branding, and so on. There are many options, and any good product manager will know them well. One of the toughest situations is to be armed only with the usual responses when you are up against a competitor that is riding the natural forces of the marketplace. Marketers refer to these tectonic shifts as “shocks”, because they are unusual events. They change the way the game is played, and once that change is made, there is usually no going back. The change is structural. At first it can be difficult to discern whether a change is a strategic market force or just a tactical move by a competitor. Most of the time, change is gradual, and it often takes hindsight to reveal it as a fundamental shift.

Open source is not a tactical move by some product company to obtain a temporary advantage. It is a basic change in the way users, most often software engineers, want to assemble their systems. It satisfies a hunger to change the way proprietary software has been used in projects.

Nor is open source a religious experience. Practical hybrid business models, which combine open and proprietary elements, have already emerged, and the two approaches coexist. Indeed, they can complement each other for quite a while. Competition will improve both models, and consequently improve the businesses that employ a mix.

Truth be told, the proprietary software companies have not quite figured out how to respond to the open source challenge to their business model. They are used to pitting product feature against product feature, or matching price with price. Both the development process and the business models of open source are completely different from anything they have experienced before, so it is not easy to respond in kind. Meanwhile, market forces are rapidly shaping the open source market, and open source is becoming an accepted part of the landscape. It is no longer strange and different.

The purpose of this article is to explore how we arrived at this point, and which steps along the way should have told us where we would end up. Large software companies that champion only proprietary software are fighting an extremely powerful opponent: the natural force of a market in transition.

Some background on market forces

We are a long way from 1776, when Adam Smith first published his groundbreaking book “The Wealth of Nations”. It became the basis of modern political economy. Software and information technology (IT) could not have been a consideration when it was written, but the basic rules still apply. The characteristics of software and IT are also enabling their own change, which reinforces the notion that the market is adaptive.

Quoting from Adam Smith’s book:

Every individual necessarily labors to render the annual revenue of the society as great as he can. He generally, indeed, neither intends to promote the public interest, nor knows how much he is promoting it. He intends only his own gain, and he is in this, as in many other cases, led by an invisible hand to promote an end which was no part of his intention. By pursuing his own interest he frequently promotes that of the society more effectually than when he really intends to promote it. I have never known much good done by those who affected to trade for the public good.

Adam Smith captured the essence of the market (and of open source): the self-interest of the consumer. This self-interest is the engine of the economy, and competition is its governor. He also described the “invisible hand”. Like the wind, we can see its effects, but we cannot quite watch it at work.

Fundamentally:

- Adam Smith explained how prices are kept from ranging arbitrarily away from the actual cost of producing a good.

- Adam Smith explained how society can induce its producers of commodities to provide it with what it wants.

- Adam Smith pointed out why it is that high prices are in effect a self-curing disease, because they encourage production of the commodity to increase.

- Adam Smith accounted for a basic similarity of incomes at each level of the great producing strata of the nation. He described, in the mechanism of the market, a self-regulating system that provides for society's orderly provision of goods and services. (_Comment:_ This now applies in a global sense, and could be the basis of a similar article arguing that the advantages of off-shoring will not last long.)

This article focuses on the first two points, and on how technology itself has made open source a viable option for satisfying demand and bringing the market into balance.

Price versus cost

Software is a peculiar product. Unlike physical goods such as cars and TVs, it requires very little in the way of consumable physical resources to produce. At one time it was copied from magnetic media onto other media (floppies or tape), boxed with printed documentation (itself derived from electronic files), shrink-wrapped, and shipped by truck from warehouses. That is hardly an optimal distribution method for something that starts off, and ends up, in electronic format: a format in which the product can be distributed at the speed of light, and in which delivery distance is not a cost factor.

Software should be an optimum good in a connected world.

In electronic format, software:

- Costs very little to store.

- Costs very little to deliver.

- Can easily meet a surge in demand.

- Offers almost zero latency between the act of selection and product reception.

- Offers replication at very little incremental cost and with no depletion of itself.

It fits well in a world of instant gratification. You see it, you want it... well you just got it!

Software does cost labor to create and maintain, and then of course there is the cost of physical resources: computers, networking infrastructure, disk drives, and perhaps a building for the developers to use. These are largely initial costs; once they have been incurred, the ongoing cost is often lower.

Other significant costs for traditional software products are those of sales and marketing. At many high-tech companies these are indeed significant, often 40 to 60 per cent of revenues, and proprietary licensing models have to recover that cost quickly. Sophisticated clients now see the “cost of sales” as being of dubious value to them. Many companies therefore try to sell and deliver products over the internet, using more of a “pull” model (users buying) rather than a “push” model (salespeople selling), and thus reduce their costs.

The point I’m making here is that there is often a big difference between software pricing and product cost. Following Adam Smith’s reasoning, that difference causes tension between buyer and seller. It also leaves room for a competitor to insert its product at a new price point. As mentioned in the previous paragraph, the internet has enabled low-cost ordering and delivery through what is called disintermediation, that is, the elimination of the middle-men. This strategy drastically reduced selling costs, as long as your clientele used the internet and felt comfortable buying products and services over it. With IT users, that was already the case.

When it came to software, Microsoft had entered the market at a lower price point than its predecessors, such as the mini-computer and workstation manufacturers. This was predicated on the PC being both low-powered and, from a licensing perspective, a single-user machine. The lower Microsoft price points were more than compensated for by volume. (In fact, for a while its lower prices made Microsoft a hero to IT managers, who could use them to negotiate with their other vendors.) Products such as Microsoft’s SQL Server held the rest of the market (Oracle’s database, for example) in check. Microsoft’s product costs (packaging and distribution) were, and still are, minimal compared to its prices. In the past, if a competitor attacked with lower pricing, Microsoft could always discount heavily, give away parts of its product functionality, or use its broad product range to bundle a product (perhaps one under market share pressure) in such a way that a competitor making a narrower range of products could not respond in kind. Cases in point: Netscape and the browser wars, or, today, the spam filtering and virus blocking software being added to MS Windows at “no additional cost”.

Initially, Microsoft’s share of the overall system cost was low. As hardware prices fell under the assault of competition, Microsoft maintained its prices, and its share of the overall cost increased.
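
The mechanism is simple arithmetic: if one component’s price holds steady while the rest of the system gets cheaper, that component’s share of the total must rise. The sketch below uses made-up prices (`SOFTWARE_PRICE` and `HARDWARE_PRICES` are hypothetical, not market data) purely to show the effect.

```python
# Hypothetical prices for illustration only; these are not market data.
SOFTWARE_PRICE = 300.0                              # OS/software license held constant
HARDWARE_PRICES = [2000.0, 1500.0, 1000.0, 500.0]   # hardware price falling over time


def software_share(hardware: float, software: float) -> float:
    """Fraction of the total system price that goes to the software license."""
    return software / (hardware + software)


shares = [software_share(hw, SOFTWARE_PRICE) for hw in HARDWARE_PRICES]

for hw, share in zip(HARDWARE_PRICES, shares):
    print(f"hardware ${hw:,.0f}: software share {share:.1%}")
```

With these invented numbers, the software share climbs from about 13% to 37.5% without the software price changing at all, which is why a fixed price becomes more visible as the rest of the stack gets cheaper.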

It was apparent to the invisible hand of the market that the only new price point that could be employed successfully was zero. Anything else could be countered by short-term price cuts: a case of “how low can you go?”, and Microsoft and Oracle have deeper pockets. The only way Microsoft and other large software vendors might be vulnerable was “death from a thousand cuts”, meaning lots of small vendors nibbling away at their product lines by attacking on all fronts. That was a difficult proposition as long as Microsoft had the operating system market to itself, since it could always retreat its products into the safety of its OS. In addition, switching costs, those incurred as users move to new products, had to be offset by an overwhelming new value proposition.

Structurally, Microsoft’s business model depends more on license revenue; it has not yet switched to a service model (unlike, say, IBM with its Global Services). Hence the savageness of its counter-attacks on the whole notion of zero-cost licensing and open source. For many companies and their software products, the key position on the value chain is now further downstream. After the delivery, it is the service that counts, as my local automobile dealership likes to say in its ads. (And my dealer now sells more than one brand of car!)

The solution stack

Customers don’t just buy software. They often need a “stack” to make it all work. In general they need hardware, an operating system, a communication mechanism, perhaps a storage solution (e.g. a DBMS), and finally a purchased or in-house developed application suite. Vendors, both hardware and software, have wrestled over control of the stack, with the advantage shifting in the last decade to the operating system vendors.

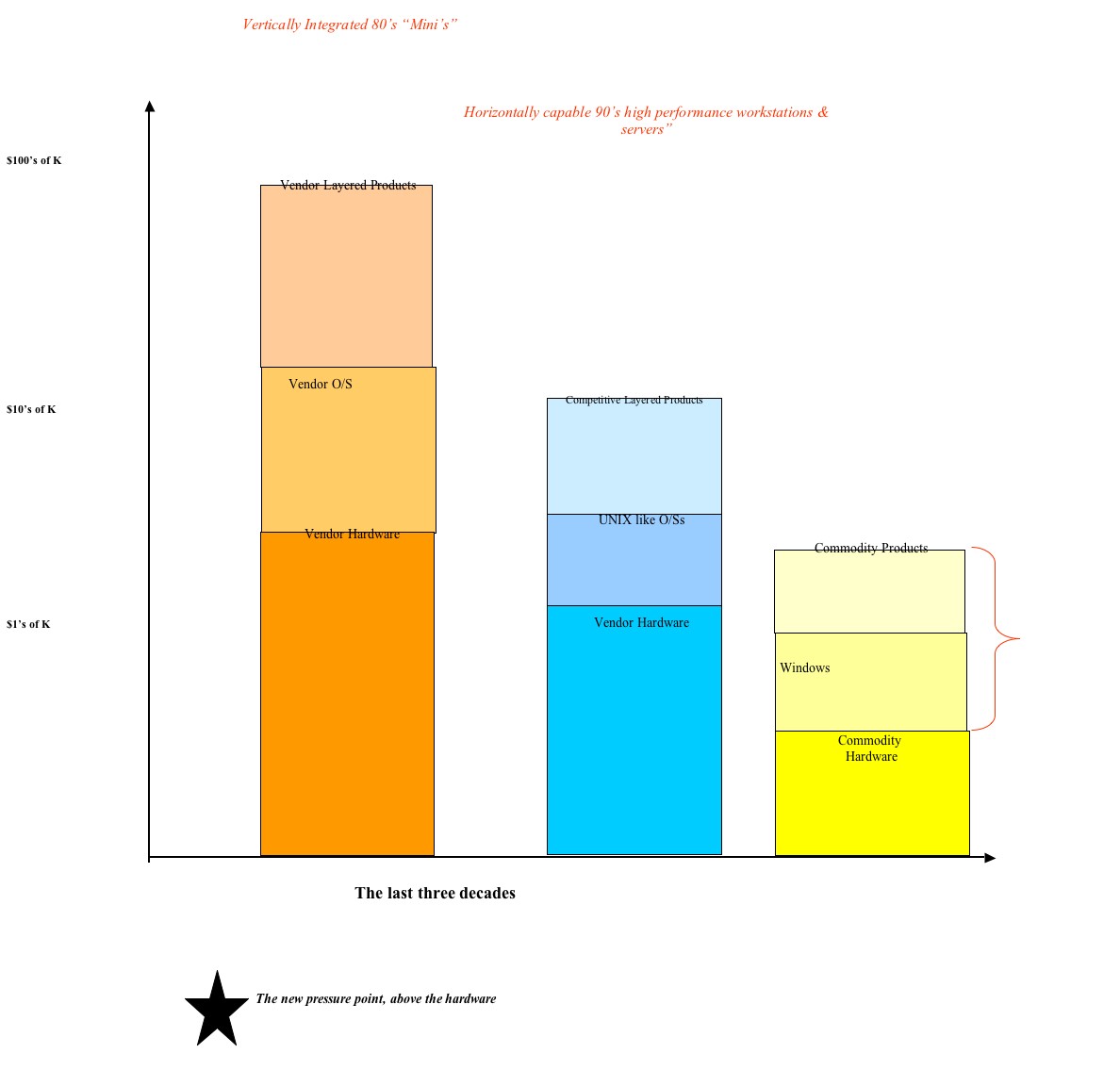

Readers of this article may be too young to know, but in the 80’s the mini-computer manufacturers (DEC, DG, Prime, etc.) created a brand new market and competed with the much higher priced IBM mainframes. Their value proposition was a lower “cost of entry” for companies that needed to use computers. They targeted the enterprise needs of medium-sized businesses and the departmental computing needs of Fortune 1000 companies. They replicated the mainframe model and tried to provide vertically integrated solutions. (A solution in those days was likely to be FORTRAN on a VAX!) All users were locked into the underlying hardware. This tight coupling let the vendors move their margins around using bundled prices. Compilers, development tools, and even the operating systems were priced attractively to keep clients committed to their high-priced hardware investment. Hardware services and upgrades were high-margin cash cows.

Third-party software vendors often rounded out the solution stack, but even they were trapped. They had to minimize the range of platforms their products supported, because their developers’ skills were not sufficiently portable. Understanding how important skill portability was, Oracle built a business model around shielding its users from many of the variables of proprietary platforms, creating a database product that looked the same from a developer’s viewpoint yet spanned many platforms. It was hard work, but it delivered value to Oracle’s clients, and Oracle priced its product accordingly. With Oracle as part of the solution set, companies could demand better prices from the hardware vendors and more than offset the Oracle pricing premium.

The users, their third-party hardware vendors (disks, memory, printers, terminals, etc.), and their third-party software vendors all knew they could not grow or leverage competition while the major system vendors locked in their customer bases. Through pressure, and the role of IT standards for both hardware and software, key pieces of the monolithic systems began to fracture. Vendors were forced to support both their own standards and public standards. Eventually public standards dominated selection criteria. Users forced vendors to remove margins from their hardware pricing. Luckily, manufacturing technology and VLSI had combined to create margins that could be reduced significantly. Lower pricing led to market growth. Additionally, the larger market, with more standards and some commodity-priced components, reduced the risk and entry cost for new competitors. With a bigger pie, lower prices, and more choice, the market was hard at work. Those who attempted to protect their market shrank. Those who exploited the new standards grew, albeit with reduced margins. Value was being created for the consumer.

This article is about open source software, so let’s focus on some of the software aspects.

By the 90’s, UNIX had transformed many third-party software and in-house development teams. Thanks to Bell Labs, there was now a somewhat open operating system that ran on many vendors’ hardware. As implemented by the vendors it was still not quite uniform, but it was uniform enough to encourage its use as a primary target. As a consequence, operating system skills became more portable. Switching costs among competing vendor UNIX versions were reduced for users. Moving software assets to new platforms became a porting activity rather than a major code rewrite. Third-party software developers could support more platforms for the same amount of effort. Vendors still tried to differentiate their operating systems, but they no longer had a lock. Price points were dropping for all products.

At this time another new phenomenon in software was occurring. Universities and government research agencies were offering their code to other researchers and users for a nominal fee, usually the cost of the media and shipping. Such software usually ran on UNIX (BSD UNIX was a preferred version), was unsupported, was licensed as “public domain”, and demanded a level of sophistication from the user if it was to be leveraged. Nevertheless, the principle of sharing knowledge by sharing code had taken hold. This behaviour was reinforced by centuries of academic tradition of sharing, and building on, one another’s knowledge.

In the early 80’s IBM opened up the hardware architecture of the PC and, perhaps unwittingly, not only created a new market, the desktop, but also offered hardware suppliers a level playing field in a growing market segment. The volume of PC components would move hardware into its commodity phase. The third phase of the declining cost of systems was underway, and this time it was hardware driven. The OS was still a lock for Microsoft, so it could leverage its position of power. It marketed its position in the middle as an island of stability, keeping hardware and software vendors in line with its APIs and compliance requirements. However, in the 90’s, as both hardware and layered software prices continued downwards under competitive pressure, Microsoft’s offerings became a more visible, larger percentage of the solution stack. That position in the stack now made Microsoft a target. Even hardware vendors, who had grown from nowhere in the previous 10 years, resented that most of the revenue dollars for systems shipped now went to Microsoft, which seemed immune to pricing pressures.

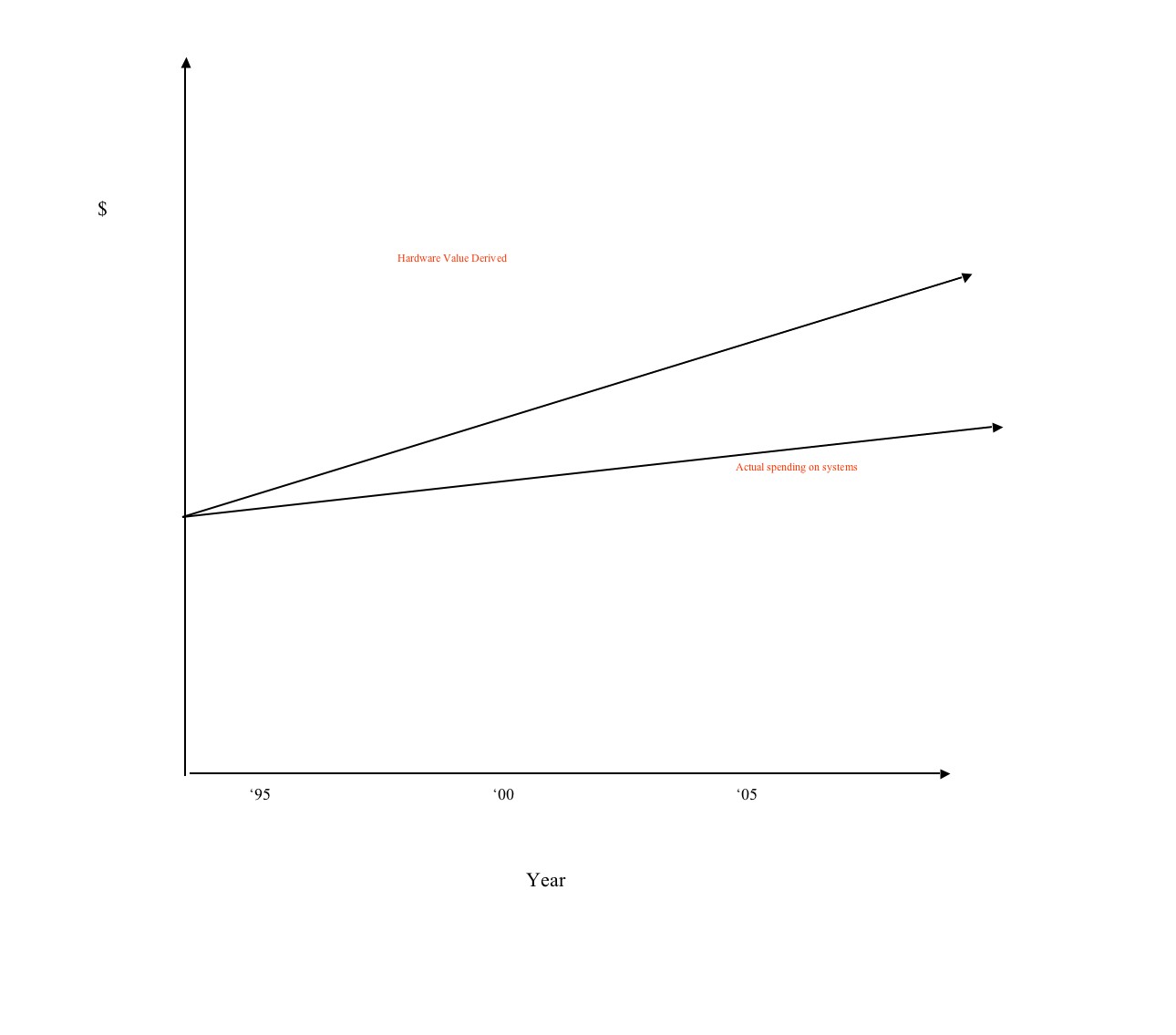

IT was still creating value, year after year. The US Bureau of Economic Statistics still showed tremendous value was being derived from the IT dollar, as shown below.

Its numbers showed that, since 1996, a 40% increase in spending had resulted in a 96% improvement in systems capability. Nearly all the value was coming from manufacturing efficiencies in systems hardware, including networking products and services. (Networking value came about as the telecom industry was deregulated: another seismic market shock!)
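
Reading both percentages as relative changes over the same period (an assumption, since the base figures are not given here), the implied gain in capability per IT dollar is:

```latex
\frac{\text{capability per dollar, later}}{\text{capability per dollar, 1996}}
  = \frac{1 + 0.96}{1 + 0.40}
  = \frac{1.96}{1.40}
  = 1.40
```

In other words, each IT dollar was buying roughly 40% more capability than it did in 1996.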

What would be the next big driver of value?

Well, for a start, the systems vendors now had some options. Windows NT was supposed to be the UNIX killer, but it did nothing of the kind. What’s more, UNIX had extended from vendor chips (IBM, SGI, Sun, Digital) to hardware that had previously been Microsoft’s exclusive domain (known as Wintel). Sun’s “Solaris on Intel” made a brief run at Microsoft’s position on the Intel architecture, but it stalled because of its pricing model and suspicions about Sun’s long-term commitment (suspicions borne out when, for a time, it appeared that the product might be discontinued). SCO’s UNIX ran on the Intel architecture, but its pricing model seemed intended to compete with the likes of AIX on PPC, Solaris on SPARC, and Tru64 on Alpha. It was not a direct threat to the Microsoft price point. BSD UNIX had been around for a long time but seemed unable to get real market traction. Something else seemed to be needed to give UNIX on Intel hardware that nudge over the top, and Linux was just that. It was gathering momentum at the right time. It was new and fresh, free of the AT&T history and intellectual property encumbrances, it was open source, and it had a lead developer in Linus Torvalds. He removed some of the anonymity of UNIX and gave it a face and a voice. He was unassuming compared with the boisterous and gregarious spokespeople of Microsoft, Oracle, Sun, HP, etc. Software developers could identify with him.

Linux, combined with other open source layered products, was also creating its own solution stack. This was not a consumer-oriented stack; it was initially targeted at software engineers assembling technical solutions and tailoring the stack. All this was facilitated by the internet and by the low-cost hardware resulting from the Wintel solution stack, which was forever demanding more memory, faster chips, and more storage. The irony was that systems that could no longer run the Wintel combination were finding new life with Linux. Linux and its applications were not as resource intensive, so Linux could better protect a user’s hardware investment. To use Linux you did not need new hardware; you could deploy it on systems being retired from Windows use. Linux was freeing up capital for other uses closer to the users’ needs.

In the meantime, the ease with which open source projects could grow had become a force. The market was organizing an assault on the software stack. Below is a comparison of the old model of open source with today’s open source development model.

Old

- Shareware/freeware/public domain.

- Cost of Media – 1800 BPI tape.

- Unsupported: use at your own risk.

- Government research – most likely 1st generation code.

- Government Projects (NASA) – useful only for narrow markets.

- University orientation – knowledge flowed freely (as long as you were credited).

- Geographically tight teams – not yet a global village.

- Lacking in infrastructure - expensive resources and vendor donated equipment came with strings attached.

- Went stale quickly, once research funds ran out.

- Pretty much shunned by business.

New

- Business strategy by major players.

- Carefully licensed to ensure the code base grows.

- Professionally packaged / supported / frequent release cycles.

- Service oriented - value and cost shift back to user control.

- Sophisticated development teams who are also users.

- Infrastructure enabled.

This last point meant:

- Good & plentiful standards / lessons learned.

- Email, instant messaging, free machine cycles, self-documenting tools.

- Newsgroups, SourceForge, Slashdot, virtual communities, access to experts.

- Fast reliable download sites, mirrors, easy network access, plenty of bandwidth.

- O’Reilly publishing.

Scratching that Itch

Developers had always had itches. Now they had the collective means to scratch.

When it came to fighting back, the vendors were in a conundrum. The large proprietary software vendors were used to attacking their competitors (usually other vendors) with fear, uncertainty and doubt. When their customers collectively became their competitors, things were a lot different. It is difficult for the vendors to attack open source products. You don’t win friends and clients by telling them they have an ugly child! If we are a society that values ownership, then surely we should feel more ownership of our open source projects than of something we licensed. Licensing doesn’t convey a feeling of ownership; quite the opposite, it is restrictive.

Furthermore, it wasn’t easy for proprietary vendors to compete once an open source product was in use at a company. Proprietary vendors need a very compelling value proposition to convince someone that their product is so much better that it is worth paying for. In essence, the competition point has shifted down the value chain to service and the cost of use. In that regard, Microsoft is taking the right approach in attacking Linux on total cost of ownership (TCO); that is the issue now. However, if Microsoft uses the wrong math, the argument can boomerang. Conversely, the open source community has to ensure it does not rest on its zero-license-cost laurels. Its products may be free to acquire, but they have usage costs and have to earn their keep every day.

Companies deploying open source solutions will get better yields from their IT dollars. The major new value from information systems will come from leveraging software, because of its position in the stack; hardware has very little left to give. Open source has a lot to offer because of its low entry cost and zero cost of replication. Therefore the implementers with the most to gain are those with large-scale systems, i.e. projects that would usually require large numbers of licenses, and thus fees. Previously, large-scale users paid a penalty as they replicated the same solution across many systems. Now, conversely, they benefit the most by deploying widely while paying only once for the services they need. They are scaling up the value but scaling down the cost.
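
The scaling argument can be made concrete with a toy comparison: per-copy license fees grow with every system deployed, while a one-time services purchase is spread over however many copies you replicate. All prices below (`LICENSE_PER_SYSTEM`, `SERVICES_ONE_TIME`) are hypothetical, and the sketch deliberately ignores ongoing usage costs, which, as noted above, open source products still carry.

```python
# Hypothetical prices for illustration only.
LICENSE_PER_SYSTEM = 1_000.0   # proprietary model: one license fee per deployed copy
SERVICES_ONE_TIME = 50_000.0   # open source model: services bought once, reused on every copy


def proprietary_cost(systems: int) -> float:
    """License fees grow linearly with the number of systems deployed."""
    return LICENSE_PER_SYSTEM * systems


def open_source_cost(systems: int) -> float:
    """Replication is free, so the one-time service cost stays flat as deployment widens."""
    return SERVICES_ONE_TIME if systems > 0 else 0.0


def break_even_systems() -> int:
    """Smallest deployment size at which the open source option is strictly cheaper."""
    n = 1
    while proprietary_cost(n) <= open_source_cost(n):
        n += 1
    return n


for n in (10, 100, 1_000):
    print(f"{n:>5} systems: proprietary ${proprietary_cost(n) / n:,.0f} per system, "
          f"open source ${open_source_cost(n) / n:,.0f} per system")
print("open source becomes cheaper at", break_even_systems(), "systems")
```

The per-system cost of the proprietary option never falls, while the open source option gets cheaper per copy the wider it is deployed; that asymmetry is why large-scale users stand to gain the most.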

Ironically, once the decision to switch to an open source product is made, it behoves management to leverage it for as much value as they can. The community is now supporting it. Every home-grown application or feature that isn’t strategic to the business, and that can be shifted into the community, results in lower internal costs.

The new software market model

In summary, the market is moving more into equilibrium, between buyer and seller. In the past, the software vendor held the advantage. Only the vendors knew and understood their products. They delivered binaries and patented or copyrighted their product to preclude you from working with its internals.

Open source shifts the pricing power back to the users and shifts the value downstream to services. The new market will react to the following drivers:

- Good software engineering knowledge and practices are becoming more widespread. People are no longer intimidated by technology.

- More standards, which will minimize overall risk. Most importantly though, they will emerge earlier in the technology cycle, where they can help more.

- More prevalent, well-documented software patterns solving recurring problems. They will be modeled, coded and ready for use in public community groups. Public components will be the new piece-parts of software systems.

- Object-oriented (OO) software continues to dominate because it makes applications easy to extend: it masks what you do not need to know and lets you satisfy your unique needs.

Open source projects will attract more companies offering commercial support. Their pricing power will be held in check by: 1) competition from the support community itself (newsgroups); 2) the ability of end users to support themselves, should they elect to do so; and 3) the emergence of tiered open source support priced above the free newsgroup model, i.e. low-cost regional providers and optional higher-cost, higher-quality global providers.

Some observations on the last three items:

- Free support from the various communities is nice. It can get you going, but it’s not timely or predictable, and it’s often only one helpful person’s opinion. It may not be backed up by others, and you have no way of judging the quality of the answers.

- Smart end users can support themselves, once they invest the time. The first question the end user must ask is, “What is my internal cost per hour?”, followed by, “Can a commercial support organization do it faster and more cost effectively?”, and finally, “Is this really the best use of my employees’ time?” Usually the answer to the second question is yes on both counts; otherwise the commercial support organization does not have a business model. It has to be better and faster than you. The customer has to be satisfied every time the support service is delivered, or next time the customer will go elsewhere. The answer to the first question is Adam Smith telling us that his first rule of open markets is still at work: price is still closely aligned with cost.

- Open source allows local suppliers to set up shop easily and offer lower-cost local support in the local language and currency. This reduces travel expenses, avoids delays in getting expertise on site, and encourages small companies in limited geographies to try a locally supported but globally available open source product. It does not seem as risky. Larger global companies are prepared to pay for consistent quality and in-depth support, so they go with the perceived leaders in the support community. Those leaders have to establish a global reputation, and one negative comment in a newsgroup can have adverse consequences.
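
The make-or-buy questions in the second observation above can be reduced to a cost-per-incident comparison. The sketch is a hypothetical model: the `SupportOption` class, its fields, and every figure are invented for illustration, and the "best use of my employees’ time" question is folded in as an assumed opportunity cost per in-house hour.

```python
from dataclasses import dataclass


@dataclass
class SupportOption:
    """One way of resolving a support incident (hypothetical model)."""
    name: str
    hours_per_incident: float               # how long it takes to resolve one incident
    rate_per_hour: float                    # internal loaded cost, or the vendor's billed rate
    opportunity_cost_per_hour: float = 0.0  # value of work your staff are not doing meanwhile

    def cost_per_incident(self) -> float:
        return self.hours_per_incident * (self.rate_per_hour + self.opportunity_cost_per_hour)


def cheaper(a: SupportOption, b: SupportOption) -> SupportOption:
    """Return the option with the lower cost per incident (ties go to the first)."""
    return a if a.cost_per_incident() <= b.cost_per_incident() else b


# Hypothetical figures for illustration only.
in_house = SupportOption("in-house", hours_per_incident=8, rate_per_hour=75, opportunity_cost_per_hour=25)
vendor = SupportOption("commercial", hours_per_incident=2, rate_per_hour=200)

for option in (in_house, vendor):
    print(f"{option.name}: ${option.cost_per_incident():,.0f} per incident")
print("cheaper:", cheaper(in_house, vendor).name)
```

With these numbers the vendor’s higher hourly rate is more than offset by its speed, which is the condition a commercial support organization has to meet to have a business model at all.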

Conclusion

Open source is correcting the imbalance between costs and pricing in the market for widely used, standards-based software in well-understood problem domains where there is little opportunity for differentiation. The open source genie is now out of the lamp. The opportunity for higher profits has shifted to more of a service-based model. This was inevitable. Over the long haul, open source products will dominate the volume market. Rapidly growing economies in Asia and South America will embrace the lower-cost model and incorporate it into their infrastructure; the volume of their adoption alone will overwhelm other numbers. Proprietary vendors will find themselves in a niche market or scrambling to reinvent their business model. Just open-sourcing their products will not work. They need to understand where the opportunity to add value exists in their installed base, and where to concede value to open source products.